Some research that I was involved in was published last week in the Journal of the Audio Engineering Society [1]. You can download it from the JAES e-library here. The research was led by Etienne Hendrickx (currently at Université de Bretagne Occidentale) and was a follow-on from other work we did together on head tracking with dynamic binaural rendering [2, 3, 4].

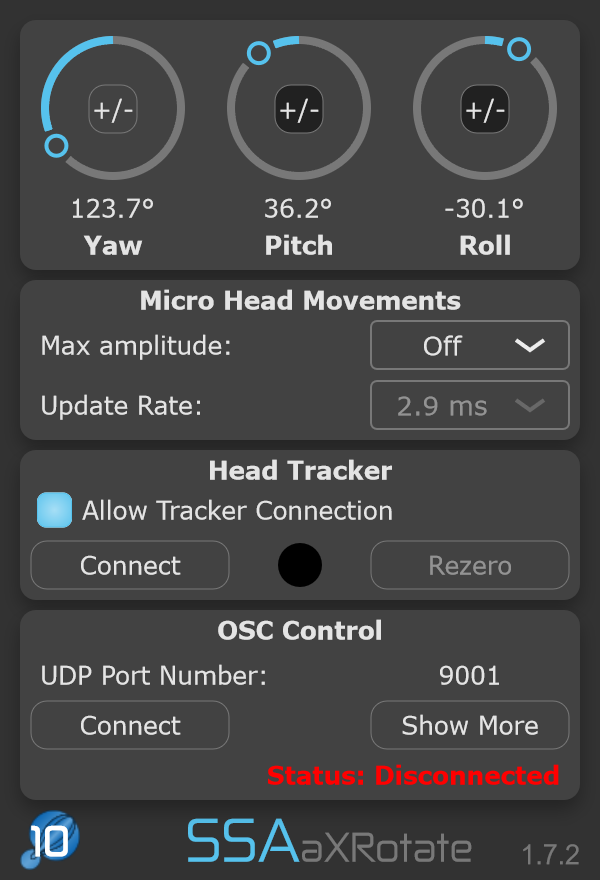

The new study looked at externalisation (the perception that a sound played over headphones is emanating from the real world, not from inside the listener’s head). It specifically investigated the worst-case scenario for externalisation: sound sources directly in front of ($0^{\circ}$) or behind ($180^{\circ}$) the listener. It tested the benefit of listeners moving their head, as well as of listeners keeping their head still while the binaural source followed a “head movement-like” trajectory. Both were found to improve perceived externalisation, with head movement providing the larger improvement.

The fact that source movements can improve externalisation is important because we don’t always have head tracking systems. Most people will experience binaural with normal headphones. This hints at a direction for some “calibration” to help the listener get immersed in the scene, improving their overall experience.

Just as importantly, the listeners in the study were all new to binaural content. Many previous studies used expert listeners, but the vast majority of real-world listeners are not experts! The results of this paper are encouraging because they show that you don’t need hours of binaural listening to get an immediate perceptual improvement in a fairly easy manner.

References

[1] E. Hendrickx, P. Stitt, J.-C. Messonnier, J.-M. Lyzwa, B. F. G. Katz, and C. de Boishéraud, “Improvement of Externalization by Listener and Source Movement Using a ‘Binauralized’ Microphone Array,” J. Audio Eng. Soc., vol. 65, no. 7, pp. 589–599, 2017. link

[2] E. Hendrickx, P. Stitt, J.-C. Messonnier, J.-M. Lyzwa, B. F. G. Katz, and C. de Boishéraud, “Influence of head tracking on the externalization of speech stimuli for non-individualized binaural synthesis,” J. Acoust. Soc. Am., vol. 141, no. 3, pp. 2011–2023, 2017. link

[3] P. Stitt, E. Hendrickx, J.-C. Messonnier, and B. F. G. Katz, “The Role of Head Tracking in Binaural Rendering,” in 29th Tonmeistertagung – VDT International Convention, 2016, pp. 1–5. link

[4] P. Stitt, E. Hendrickx, J.-C. Messonnier, and B. F. G. Katz, “The influence of head tracking latency on binaural rendering in simple and complex sound scenes,” in Audio Engineering Society Convention 140, 2016, pp. 1–8. link